VR Interventions for Veteran Social Anxiety

Developing and evaluating VR exposure therapy for student veterans, from rapid prototyping to multimodal assessment of treatment efficacy.

Student veterans face unique challenges when transitioning to college life, and social anxiety and PTSD are significant barriers to their success and well-being. This research project focuses on developing, prototyping, and evaluating accessible and effective Virtual Reality Exposure Therapy (VRET) to help veterans manage symptoms of social anxiety in a controlled, safe environment.

Our work follows an interdisciplinary approach, combining social work, computer science, and communication design to create interventions that are not only clinically sound but also technologically robust and user-centered. The project spans the full lifecycle from understanding user needs to developing novel evaluation methods using physiological signals and natural language processing.

Understanding the Need and Prototyping Solutions

Our initial research involved qualitative studies with student veterans to understand the specific triggers and environments that provoke social anxiety (Trahan et al., 2019). Crowded, unpredictable public spaces like university quads, classrooms, and grocery stores were identified as key challenges.

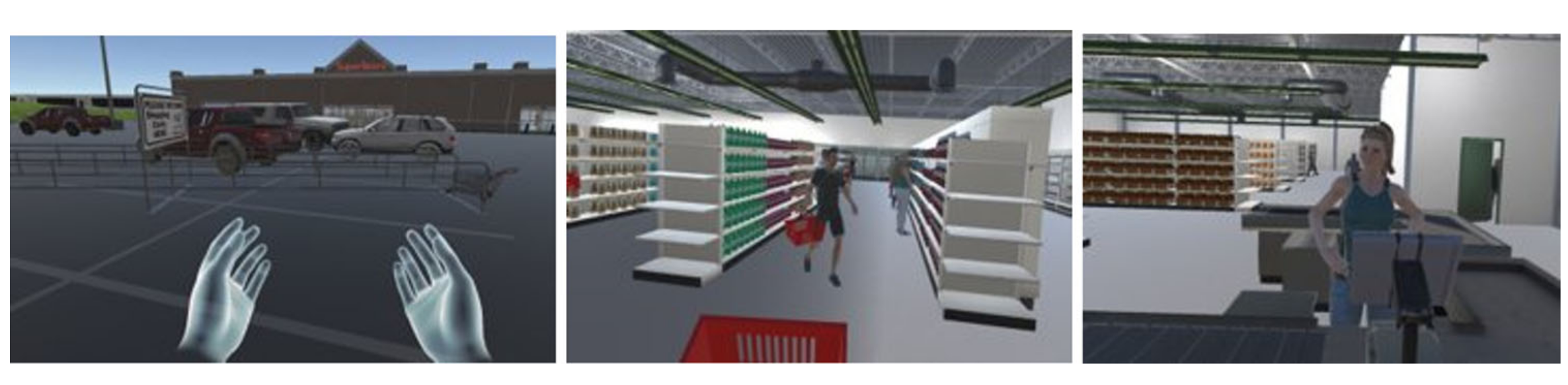

Based on these insights, we developed a rapid prototyping process for creating VR interventions (Metsis et al., 2019). This process lets us quickly validate hypotheses and gather user feedback before investing in full-scale 3D development. A core part of this research was a comparative study of two immersive technologies: 360° video and fully interactive 3D virtual reality (Nason et al., 2019). We found that while 360° video offers high realism, it lacks the interactivity and user control that are crucial for therapeutic exposure. 3D VR, in contrast, provides this control by allowing users to navigate and engage with the environment, and it proved more effective despite its lower graphical fidelity.

Evaluating VR Efficacy with Emotion and Language Analysis

A major challenge in mental health research is objectively measuring an intervention’s effect. To address this, we developed a multimodal evaluation framework combining subjective self-reports with objective physiological and behavioral data.

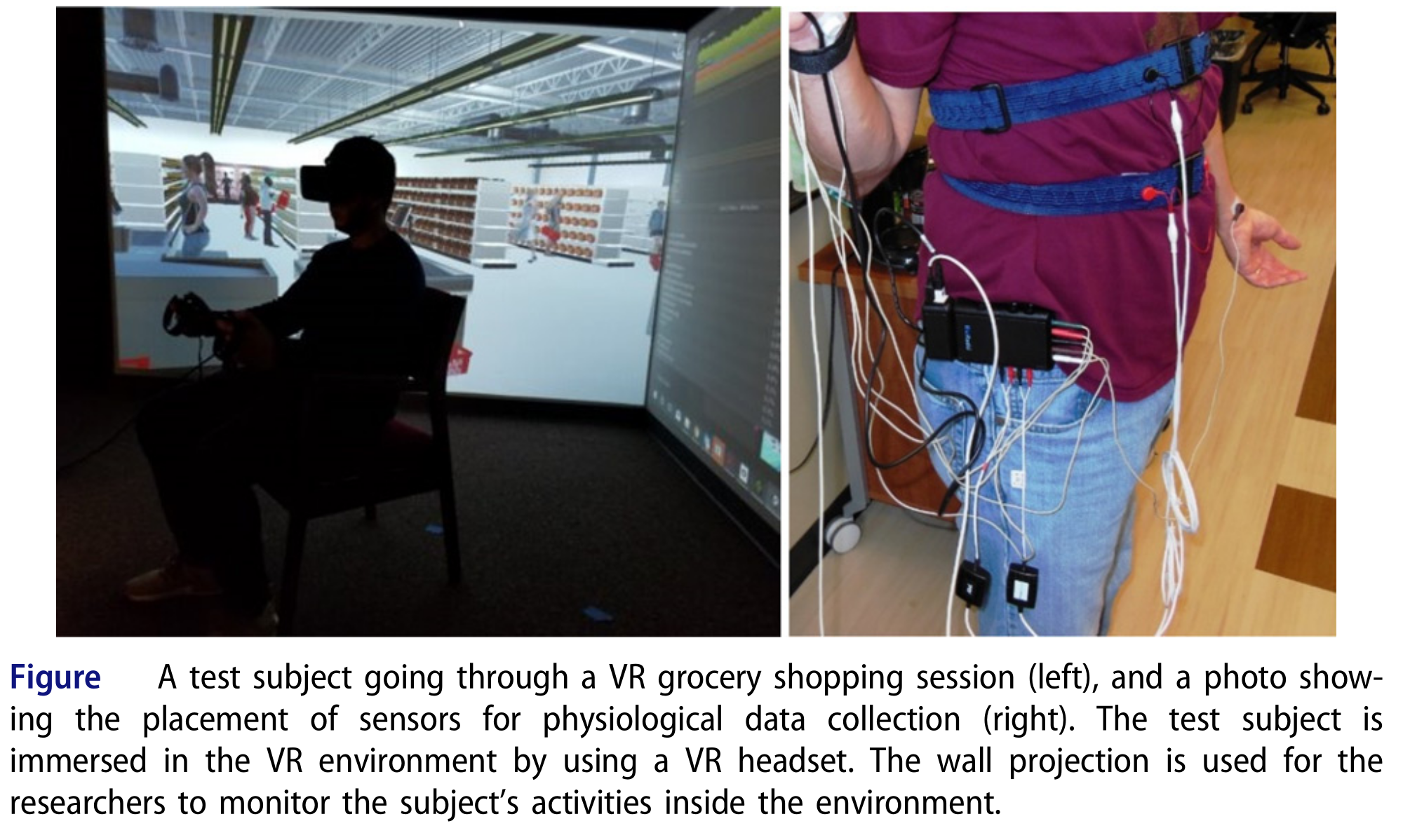
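As a rough illustration of how subjective and objective channels could be put on a common footing, the sketch below standardizes each modality within a single participant (z-scores) and averages the results scene by scene into one composite score. The function names, the modality keys, and the equal-weight averaging are assumptions made for illustration; they are not the framework's actual scoring rule.

```python
from statistics import mean, pstdev


# Hypothetical helper: not the project's published scoring method.
def zscores(values):
    """Standardize one participant's values for a single modality."""
    mu, sd = mean(values), pstdev(values)
    if sd == 0:
        # A modality that never varied carries no information about relative arousal.
        return [0.0] * len(values)
    return [(v - mu) / sd for v in values]


def composite_arousal(by_modality):
    """Equal-weight average of per-modality z-scores, one value per scene.

    by_modality maps a modality name (e.g. a self-report rating or a
    physiological feature) to one value per VR scene, with every list in
    the same scene order. Keys and weighting are illustrative assumptions.
    """
    standardized = [zscores(values) for values in by_modality.values()]
    return [mean(scene) for scene in zip(*standardized)]


# Toy, made-up numbers for three scenes (e.g. quad, classroom, grocery store).
scores = composite_arousal({
    "self_report": [20, 40, 60],
    "gsr_change_us": [0.1, 0.4, 0.9],
})
print(scores)
```

Standardizing within each participant sidesteps the large between-person differences in resting physiology, at the cost of discarding absolute levels; a real analysis might weight modalities differently or model them jointly.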

We used a variety of non-invasive biosensors to measure emotional arousal during VR exposure (Anderson et al., 2017; Hinkle et al., 2019). By tracking signals such as galvanic skin response (GSR), heart rate derived from the electrocardiogram (ECG), and brain activity recorded by electroencephalography (EEG), we obtain an objective, real-time measure of a user's stress response to specific stimuli within the virtual environment. A case study combining VRET with EEG analysis found that the intervention not only reduced PTSD symptoms but was also accompanied by measurable changes in the brain's neural connectivity (Trahan et al., 2021).
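The per-signal measures described above can be sketched in a few lines. This example assumes R-peaks have already been detected in the ECG and that GSR is sampled in microsiemens, and it uses plain Pearson correlation between EEG channels as the simplest possible connectivity measure. Published EEG connectivity analyses typically use frequency-specific measures such as coherence, and nothing here reproduces the pipeline of the cited studies; all function names are hypothetical.

```python
from math import sqrt
from statistics import mean


def heart_rate_bpm(r_peak_times_s):
    """Mean heart rate (beats/min) from R-peak timestamps in seconds (assumed pre-detected)."""
    if len(r_peak_times_s) < 2:
        raise ValueError("need at least two R-peaks")
    rr_intervals = [b - a for a, b in zip(r_peak_times_s, r_peak_times_s[1:])]
    return 60.0 / mean(rr_intervals)


def gsr_response(baseline_us, stimulus_us):
    """Change in mean skin conductance (microsiemens) from a resting baseline to a stimulus window."""
    return mean(stimulus_us) - mean(baseline_us)


def pearson(x, y):
    """Pearson correlation of two equal-length sample lists."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("a flat channel has undefined correlation")
    return cov / sqrt(var_x * var_y)


def connectivity_matrix(channels):
    """Pairwise Pearson correlation between EEG channels (a simplistic connectivity proxy)."""
    n = len(channels)
    return [[pearson(channels[i], channels[j]) for j in range(n)] for i in range(n)]


# Toy inputs for illustration only.
print(heart_rate_bpm([0.0, 0.5, 1.0, 1.5]))
print(gsr_response([2.0, 2.0], [3.0, 3.5]))
print(connectivity_matrix([[1, 2, 3], [2, 4, 6], [3, 2, 1]]))
```

Comparing such matrices before and after treatment is one way connectivity "changes" could be quantified, though the statistics for doing so rigorously are well beyond this sketch.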

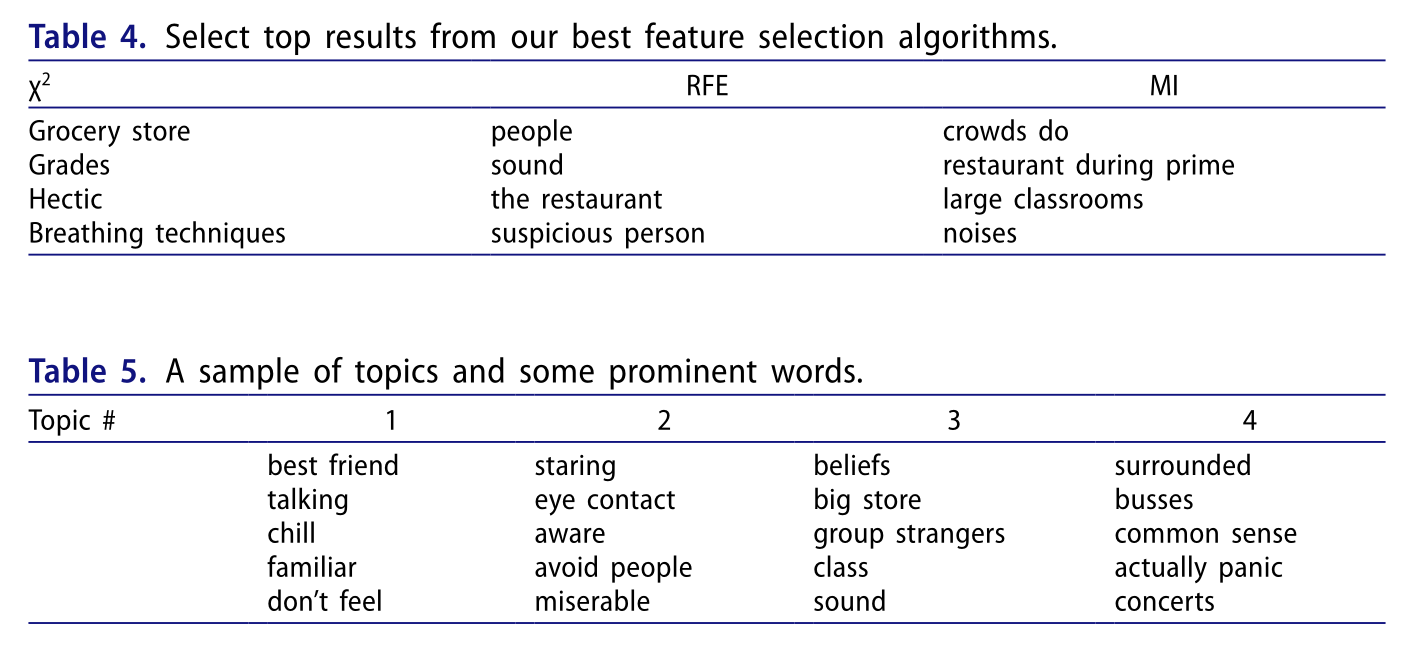

We also developed a novel method for detecting the intensity of anxiety from language. Using text analysis and machine learning on transcribed interviews with veterans, we classified the level of anxiety expressed in their own words (Byers et al., 2023). This adds another objective layer of data for assessing treatment progress.
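To make the language-based approach concrete, the sketch below trains a multinomial Naive Bayes classifier on bag-of-words counts to assign an anxiety level to a transcript snippet. This is a swapped-in, textbook technique chosen for brevity; it is not the model used by Byers et al. (2023), and the class name, the labels ("low"/"high"), and the training sentences are invented for illustration.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase and split into word tokens (apostrophes kept, e.g. "i'm")."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesAnxiety:
    """Hypothetical multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.class_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(n_docs / total_docs)  # log prior
            for word in tokenize(text):
                if word in self.vocab:  # ignore words never seen in training
                    score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Made-up toy corpus, not real interview data.
train_texts = [
    "i avoid crowds and my heart races in class",
    "i panic in the grocery store and leave early",
    "class went fine and i talked with classmates",
    "i felt calm walking across the quad today",
]
train_labels = ["high", "high", "low", "low"]
model = NaiveBayesAnxiety().fit(train_texts, train_labels)
print(model.predict("my heart races when the store is crowded"))
```

With real transcripts, one would hold out data for evaluation and would likely need richer features than raw word counts; the toy corpus only shows the mechanics.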

This research has also been extended to other high-stress domains, such as a VR and Augmented Reality (AR) training platform for first responders working in a bus-sized ambulance, demonstrating the broad applicability of our prototyping and evaluation methodologies (Koutitas et al., 2019).