Multi-Modal Fall Detection: From Vision to Personalized Wearables

A research project exploring robust fall detection, first with a viewpoint-independent depth-camera system and later with personalized deep learning models on smartwatches.

Falls are a leading cause of injury and death among older adults, making automated, real-time fall detection a critical area of research for assistive technology. Our research tackles this problem through two distinct but complementary modalities. We first developed a robust, vision-based system for in-home monitoring using depth cameras. Subsequently, to build a solution that is mobile and user-centric, we turned to ubiquitous personal devices. This led to our work on smartwatch-based detection, which culminates in a fully personalized, adaptive system.

Viewpoint-Independent Vision-Based Detection

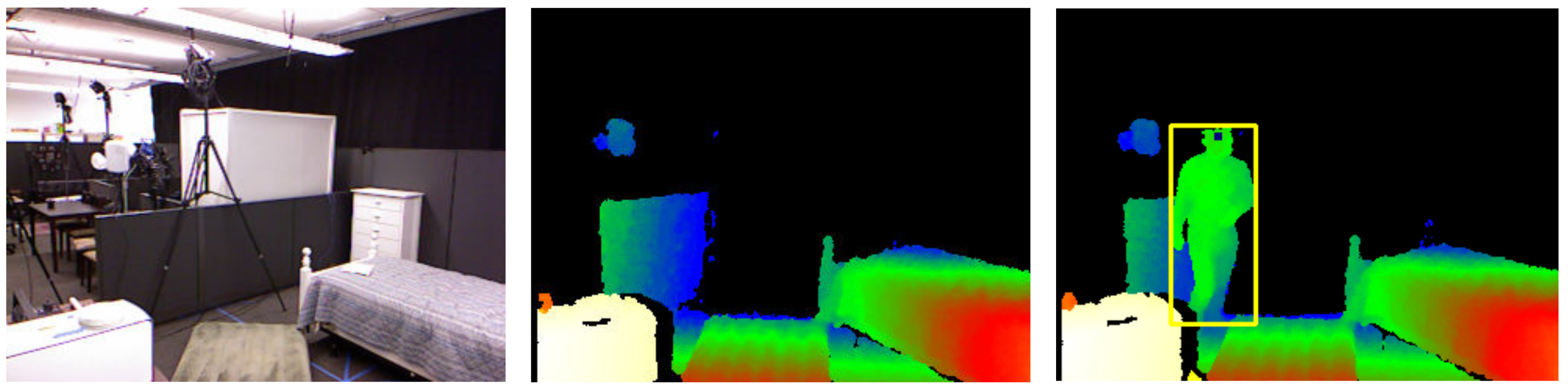

Our early work in this area focused on creating a reliable, less intrusive system for in-home environments using Kinect depth cameras. We introduced a statistical method that, unlike other vision-based systems of the time, is viewpoint-independent and does not rely on hardcoded thresholds (Zhang et al., 2012).

The system first performs background subtraction on the depth stream to isolate the person. It then tracks the person’s head and extracts five novel features describing its movement (e.g., total head drop, maximum speed, and duration). These features are combined in a Bayesian framework to estimate the probability that a fall has occurred. A key contribution was our evaluation protocol: the system was trained on data from one camera viewpoint and successfully tested on a completely different one, demonstrating robustness to camera placement.
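To make the feature-plus-Bayes pipeline concrete, here is a minimal sketch. It assumes that background subtraction and head tracking have already produced a list of `(time_s, head_height_m)` samples. It computes only the three features named above; the other two are not described here and are omitted. It also uses a Gaussian naive Bayes classifier as a stand-in for the paper's Bayesian framework, which may be formulated differently. The function and class names, the exact feature definitions, and the training vectors are hypothetical illustrations, not the original method or data.

```python
import math

def head_features(track):
    """Illustrative head-movement features from a tracked head trajectory.

    Hypothetical: track is a list of (time_s, head_height_m) samples, assumed
    to come from depth-based background subtraction plus head tracking.
    Returns (total_drop_m, max_downward_speed_m_per_s, duration_s).
    """
    heights = [h for _, h in track]
    peak = heights.index(max(heights))
    # Total head drop: highest head position minus the final one.
    total_drop = heights[peak] - heights[-1]
    # Maximum downward speed, from finite differences between samples.
    max_speed = 0.0
    for (t0, h0), (t1, h1) in zip(track, track[1:]):
        if t1 > t0:
            max_speed = max(max_speed, (h0 - h1) / (t1 - t0))
    # Duration: time from the highest head position to the end of the track.
    duration = track[-1][0] - track[peak][0]
    return (total_drop, max_speed, duration)

class GaussianNaiveBayes:
    """Gaussian naive Bayes: per-class priors plus per-feature mean/variance."""

    def fit(self, X, y):
        self.stats = {}
        for c in sorted(set(y)):
            rows = [x for x, label in zip(X, y) if label == c]
            cols = list(zip(*rows))
            means = [sum(col) / len(col) for col in cols]
            # A small variance floor avoids division by zero.
            variances = [
                sum((v - m) ** 2 for v in col) / len(col) + 1e-6
                for col, m in zip(cols, means)
            ]
            self.stats[c] = (len(rows) / len(y), means, variances)
        return self

    def predict_proba(self, x):
        log_post = {}
        for c, (prior, means, variances) in self.stats.items():
            s = math.log(prior)
            for v, m, var in zip(x, means, variances):
                s -= 0.5 * math.log(2 * math.pi * var) + (v - m) ** 2 / (2 * var)
            log_post[c] = s
        # Normalize in log space for numerical stability.
        top = max(log_post.values())
        unnorm = {c: math.exp(s - top) for c, s in log_post.items()}
        total = sum(unnorm.values())
        return {c: u / total for c, u in unnorm.items()}

# Toy, made-up feature vectors: (drop_m, max_speed_m_per_s, duration_s).
X = [
    (1.20, 3.0, 0.6), (1.10, 2.6, 0.7), (1.30, 3.4, 0.5),  # toy falls
    (0.50, 0.8, 1.8), (0.40, 0.6, 2.2), (0.60, 1.0, 1.5),  # toy sit-downs
]
y = ["fall", "fall", "fall", "not_fall", "not_fall", "not_fall"]
model = GaussianNaiveBayes().fit(X, y)

track = [(0.0, 1.60), (0.2, 1.45), (0.4, 0.90), (0.6, 0.40), (0.8, 0.35)]
feats = head_features(track)
probs = model.predict_proba(feats)
```

Because the classifier learns per-class feature distributions from data, there is no hand-tuned threshold on any single feature. This mirrors the motivation given above, although the real system's model is not reproduced here.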

Wearable-Based Detection with Deep Learning

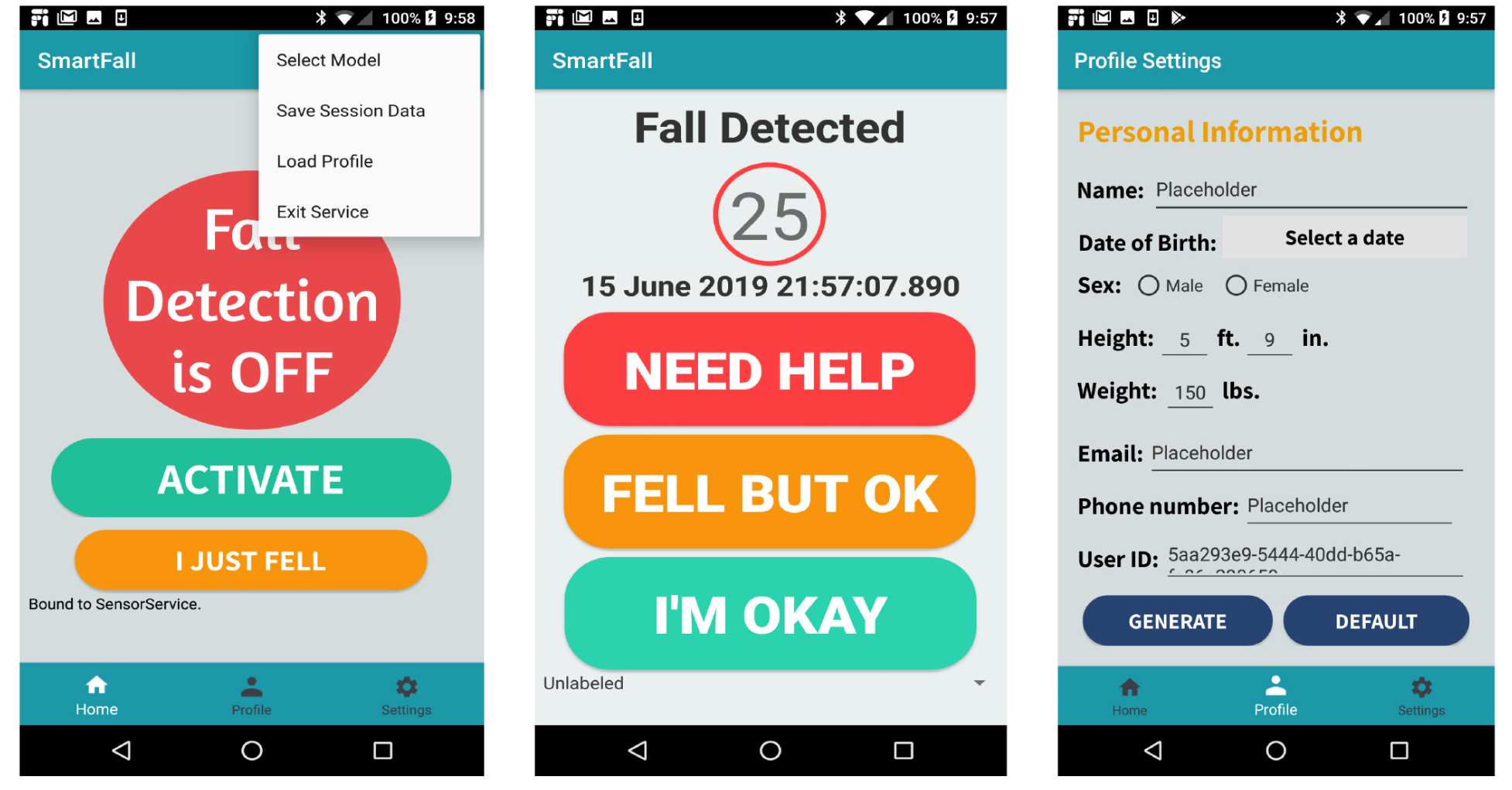

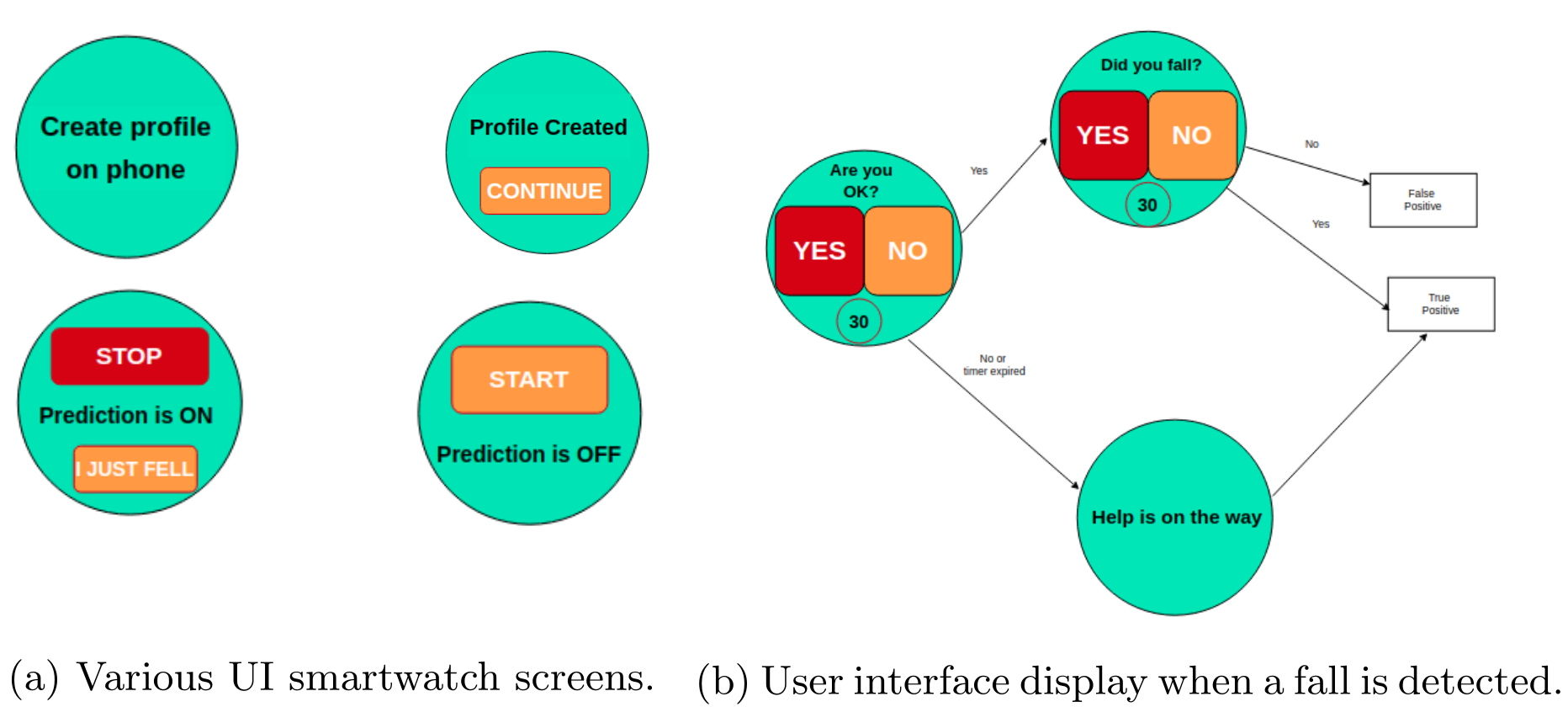

To create a solution that is mobile and works outside a fixed environment, we shifted our focus to wearable devices. This led to the development of the SmartFall system, an Android application that uses accelerometer data from a commodity smartwatch to detect falls in real-time (Mauldin et al., 2018).

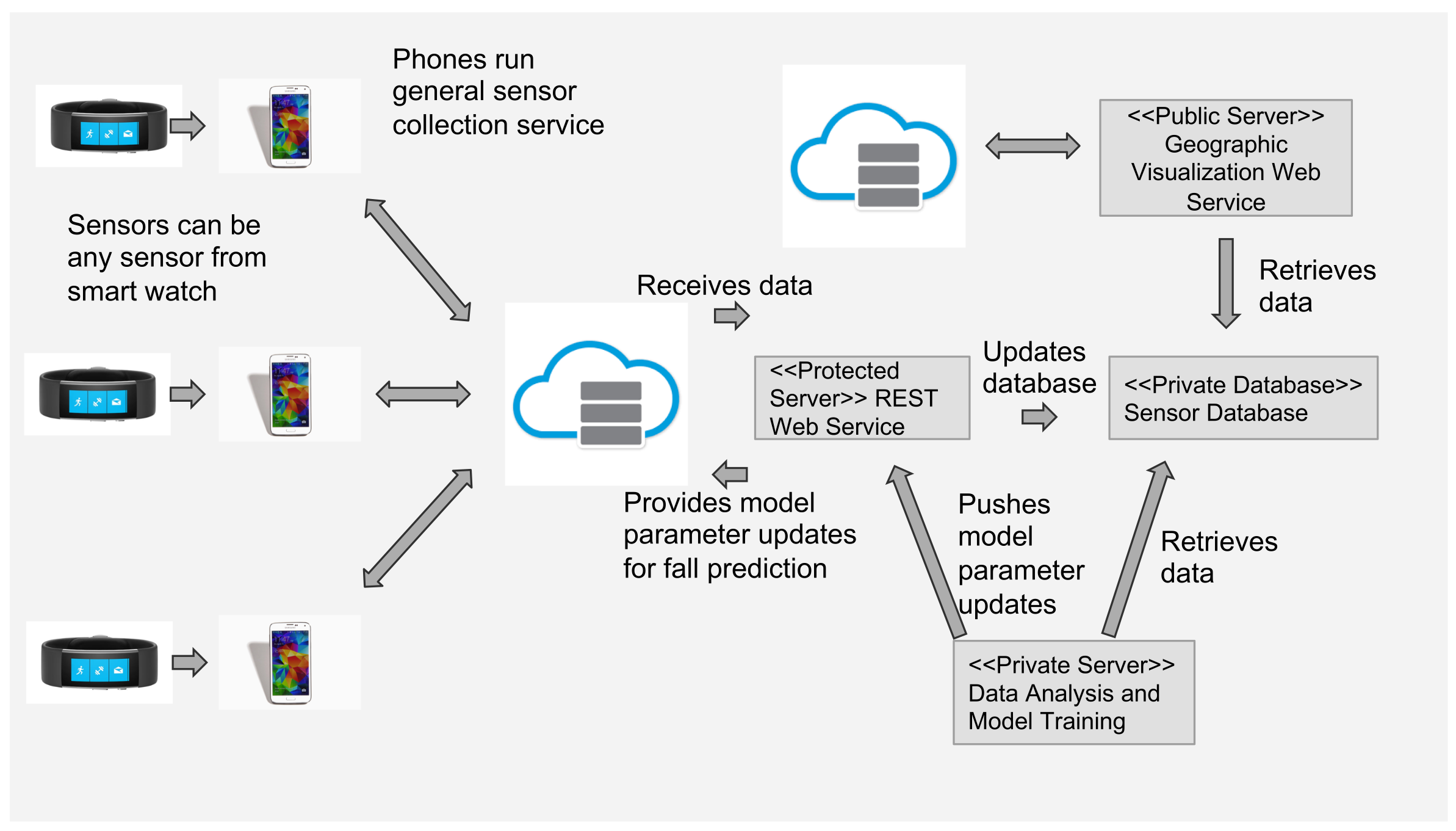

The system is based on a three-layer IoT architecture: the watch acts as the edge sensor, a paired smartphone performs the computation, and a cloud server provides back-end services. We demonstrated that a deep learning model, specifically a recurrent neural network (RNN), significantly outperforms traditional machine learning approaches, because it can automatically learn subtle temporal features directly from the raw sensor data instead of relying on hand-crafted features.
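As an illustration of the RNN idea, the sketch below shows the kind of computation that would run on the phone tier of this three-layer design while the watch only streams samples. It slices the raw `(x, y, z)` accelerometer stream into overlapping windows and passes each window through a single-layer Elman RNN that outputs a fall probability. The following are all assumptions for illustration: the window size and step, hidden size, 0.5 alarm threshold, the names `sliding_windows` and `TinyRNN`, and the sample values. The weights are random placeholders rather than trained values, and SmartFall's actual network architecture is not reproduced here.

```python
import math
import random

def sliding_windows(samples, size, step):
    """Yield overlapping fixed-size windows of (x, y, z) accelerometer samples."""
    for start in range(0, len(samples) - size + 1, step):
        yield samples[start:start + size]

class TinyRNN:
    """Single-layer Elman RNN mapping a window of raw samples to P(fall).

    Hypothetical: weights are random placeholders; a deployed model would be
    trained offline and shipped to the phone.
    """

    def __init__(self, input_dim=3, hidden_dim=8, seed=0):
        rng = random.Random(seed)

        def matrix(rows, cols):
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

        self.W_xh = matrix(hidden_dim, input_dim)
        self.W_hh = matrix(hidden_dim, hidden_dim)
        self.b_h = [0.0] * hidden_dim
        self.w_out = [rng.uniform(-0.5, 0.5) for _ in range(hidden_dim)]
        self.b_out = 0.0

    def forward(self, window):
        h = [0.0] * len(self.b_h)
        for x in window:
            # h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h), one timestep per sample.
            h = [
                math.tanh(
                    sum(w * xi for w, xi in zip(self.W_xh[i], x))
                    + sum(w * hj for w, hj in zip(self.W_hh[i], h))
                    + self.b_h[i]
                )
                for i in range(len(h))
            ]
        # Read out the final hidden state through a sigmoid.
        logit = sum(w * hi for w, hi in zip(self.w_out, h)) + self.b_out
        return 1.0 / (1.0 + math.exp(-logit))

ALARM_THRESHOLD = 0.5  # hypothetical decision threshold

stream = [(0.1, -0.2, 9.8)] * 100  # hypothetical raw samples in m/s^2
model = TinyRNN()
probs = [model.forward(w) for w in sliding_windows(stream, size=40, step=20)]
alarms = [p >= ALARM_THRESHOLD for p in probs]
```

The recurrence consumes the raw samples one timestep at a time, so any temporal features (such as a spike followed by stillness) have to be learned in the weights rather than engineered in advance.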

A key challenge in this domain is the scarcity of large, annotated datasets. To address this, we explored ensemble deep learning techniques (Mauldin et al., 2019; Mauldin et al., 2020), showing that an ensemble of smaller, diverse RNN models consistently outperforms a single, larger model.
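One simple way to combine ensemble members is soft voting: average the members' fall probabilities and threshold the result. The sketch below assumes soft voting; the cited papers may combine members differently. To keep the example short, each small RNN is replaced with a hypothetical stub, `make_stub_member`, that scores a window by its peak acceleration magnitude with a member-specific logistic curve. In practice each member would be a separately trained RNN. Accelerometer values are assumed to be in m/s², and the 0.5 threshold is an illustrative choice.

```python
import math
from statistics import mean

def make_stub_member(scale, offset):
    """Hypothetical stand-in for one small, independently trained RNN.

    Maps the peak acceleration magnitude of a window to a fall probability;
    different (scale, offset) pairs mimic members trained with different
    seeds, data splits, or hyperparameters.
    """
    def member(window):
        peak = max(math.sqrt(x * x + y * y + z * z) for x, y, z in window)
        return 1.0 / (1.0 + math.exp(-(scale * peak - offset)))
    return member

def ensemble_probability(members, window):
    """Soft voting: average the members' fall probabilities."""
    return mean(member(window) for member in members)

def ensemble_is_fall(members, window, threshold=0.5):
    return ensemble_probability(members, window) >= threshold

members = [
    make_stub_member(0.30, 5.0),
    make_stub_member(0.25, 4.0),
    make_stub_member(0.40, 7.0),
]
resting = [(0.0, 0.0, 9.8)] * 32  # about 1 g throughout, no impact
impact = [(0.0, 0.0, 9.8)] * 16 + [(20.0, 5.0, 22.0)] + [(0.0, 0.0, 9.8)] * 15

print(ensemble_is_fall(members, resting))  # False
print(ensemble_is_fall(members, impact))   # True
```

The averaged probability always lies between the lowest and highest member outputs. When members make different mistakes, averaging can smooth out individual errors, which is one intuition for why several small models may beat one large model when training data is scarce.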

Personalization via Edge-Cloud Collaboration

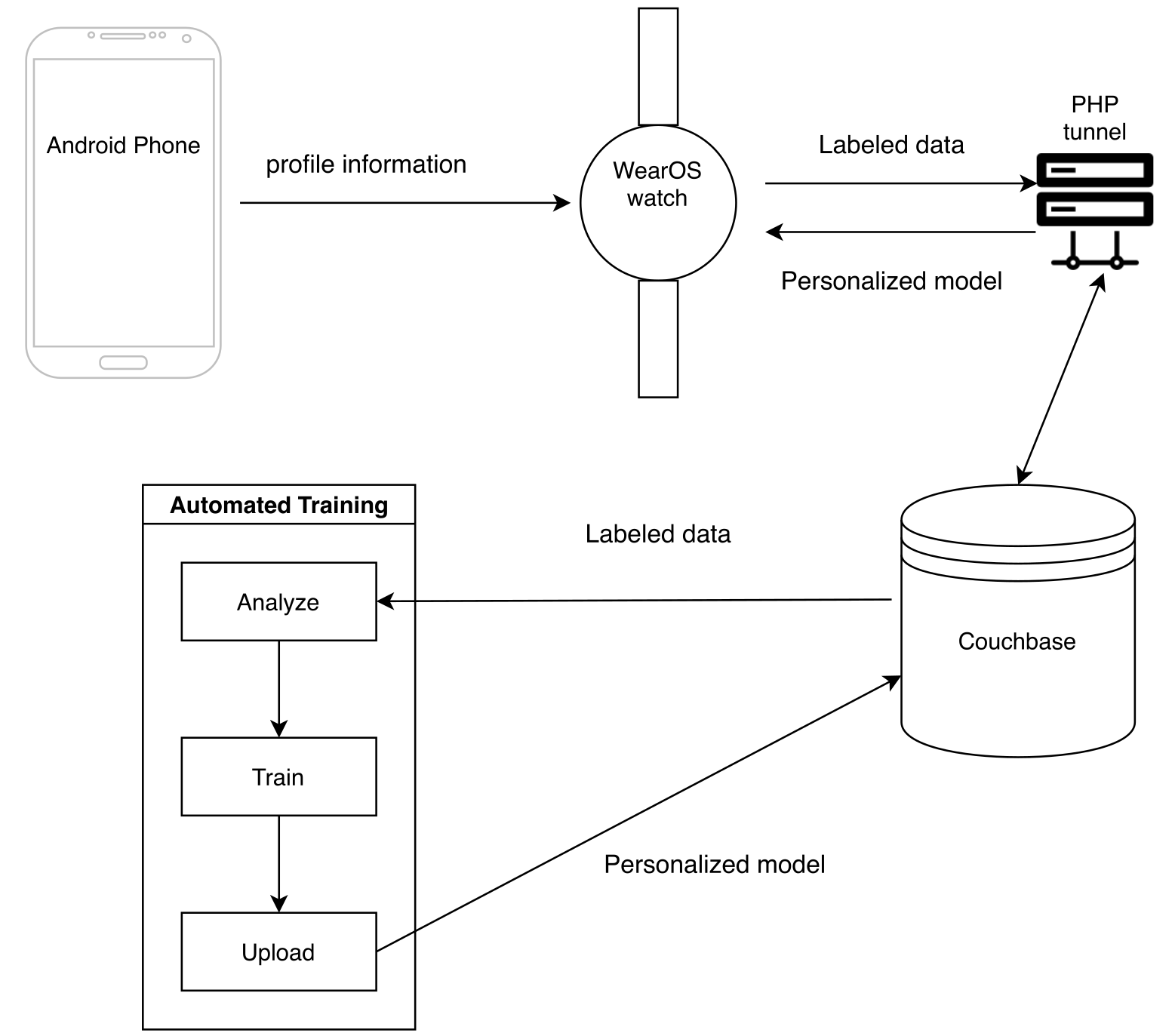

Finally, recognizing that a “one-size-fits-all” model is not optimal, our latest work introduces a collaborative edge-cloud framework for personalized fall detection (Ngu et al., 2020; Ngu et al., 2021).

In this architecture, fall detection runs directly on the smartwatch. When a false alarm occurs, the user provides feedback through a simple on-watch UI. The labeled data are securely synchronized with a cloud back end, which automatically triggers a personalized re-training pipeline. The new model, now tailored to the user’s specific movement patterns, is pushed back to the watch. This automated feedback loop allows the system to adapt continuously and improve its precision, dramatically reducing false positives while maintaining high recall. This work builds on our broader research into robust IoT middleware architectures (Ngu et al., 2016).

Right: The simple user interface on the smartwatch for providing feedback after a potential fall is detected.
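The feedback loop described above can be sketched as follows. The sketch makes four simplifying assumptions. First, a logistic-regression model over two hypothetical window features (peak acceleration in g and a post-impact stillness score) replaces the real deep model. Second, an in-memory `CloudBackend` object replaces the real synchronization and re-training pipeline. Third, re-training uses plain stochastic gradient descent. Fourth, the user's labels are mixed with a global training set. All class names, features, weights, and data are illustrative assumptions; transport, security, and scheduling are omitted.

```python
import math
from dataclasses import dataclass, field

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

@dataclass
class FallModel:
    weights: list
    bias: float
    version: int = 0

    def probability(self, features):
        return sigmoid(sum(w * f for w, f in zip(self.weights, features)) + self.bias)

@dataclass
class Watch:
    """Edge side (hypothetical): runs detection locally and queues feedback."""
    model: FallModel
    threshold: float = 0.5
    pending: list = field(default_factory=list)

    def on_window(self, features, user_confirms_fall):
        alarm = self.model.probability(features) >= self.threshold
        if alarm:
            # Label 1 = user confirmed the fall, 0 = user reported a false alarm.
            self.pending.append((features, 1 if user_confirms_fall else 0))
        return alarm

    def sync(self, cloud):
        """Upload queued feedback and install a newer model if one comes back."""
        new_model = cloud.receive_feedback(self.pending)
        self.pending = []
        if new_model is not None and new_model.version > self.model.version:
            self.model = new_model

class CloudBackend:
    """Cloud side (hypothetical): stores feedback and re-trains a personal model."""

    def __init__(self, global_model, global_data, lr=0.5, epochs=500):
        self.model = global_model
        self.global_data = list(global_data)
        self.user_data = []
        self.lr = lr
        self.epochs = epochs

    def receive_feedback(self, samples):
        if not samples:
            return None
        self.user_data.extend(samples)
        return self.retrain()

    def retrain(self):
        # SGD on logistic loss over global + user data, from the current weights.
        w, b = list(self.model.weights), self.model.bias
        data = self.global_data + self.user_data
        for _ in range(self.epochs):
            for x, y in data:
                err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
                w = [wi - self.lr * err * xi for wi, xi in zip(w, x)]
                b -= self.lr * err
        self.model = FallModel(w, b, self.model.version + 1)
        return self.model

# Hypothetical global model: relies only on peak acceleration (g).
global_model = FallModel(weights=[2.0, 0.0], bias=-4.0)
# Hypothetical global data: (peak_g, stillness) -> 1 = fall, 0 = not a fall.
global_data = [((3.0, 0.9), 1), ((2.8, 0.8), 1), ((1.0, 0.1), 0), ((1.2, 0.2), 0)]
cloud = CloudBackend(global_model, global_data)
watch = Watch(global_model)

gesture = (2.7, 0.2)  # a user-specific vigorous gesture, not a fall
before = watch.model.probability(gesture)  # sigmoid(1.4), so an alarm fires
alarm = watch.on_window(gesture, user_confirms_fall=False)
watch.sync(cloud)
after = watch.model.probability(gesture)
```

Mixing the global data with the user's feedback is one way to keep recall high. Re-training on false-alarm labels alone would push every prediction toward "no fall", whereas here the global fall examples force the personalized model to rely on stillness rather than peak magnitude alone.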