Technology-Enhanced Physical Rehabilitation

A research program on computer-aided rehabilitation, from Kinect-based avatars and haptic robotics to multilevel frameworks for human motion analysis.

Physical therapy is a cornerstone of recovery from conditions that impair motor function, whether they result from stroke, accidents, or chronic diseases such as rheumatoid arthritis. However, traditional rehabilitation can be tedious, and patient adherence to prescribed routines is often low. This project focuses on the development of novel cyber-physical systems that enhance traditional therapy by making it more engaging, providing rich real-time feedback, and enabling quantitative assessment of a patient’s progress.

Our work explores a spectrum of technologies, from low-cost, vision-based systems using the Microsoft Kinect to advanced, guided therapy using haptic robotic arms. A core component of this research is not just the creation of these interactive systems, but also the development of sophisticated methodologies for analyzing the complex human motion data they generate.

Kinect-Based Rehabilitation with Avatars

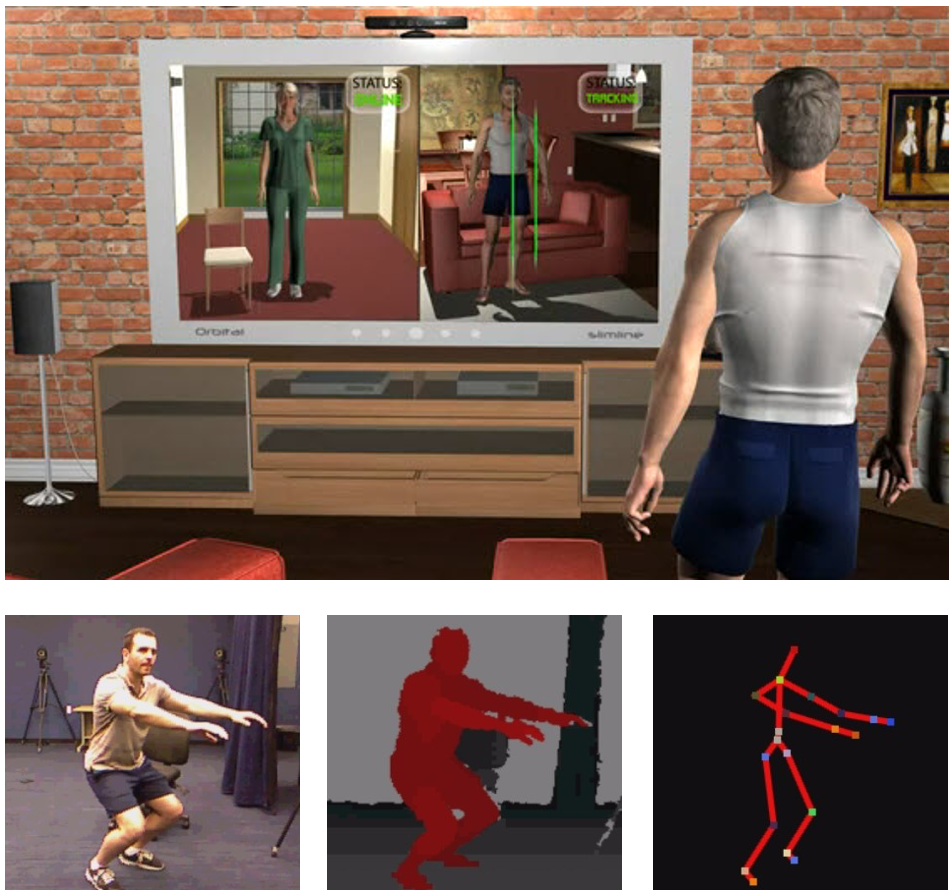

One of the key barriers to in-home rehabilitation is the cost and complexity of equipment. Our research explored the use of the low-cost Microsoft Kinect sensor to create accessible and motivating therapy exercises (Metsis et al., 2013). We developed a system where a physical therapist’s movements are recorded by the Kinect and mapped onto a 3D avatar. This avatar can then guide the patient through their exercises in a more engaging and human-like way, without the therapist needing to be physically present (Ebert et al., 2015).
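The text does not say how the therapist’s recorded movements are mapped onto the avatar. One common approach, assumed here purely for illustration, is to convert the tracked 3D joint positions into joint angles. Angles do not depend on the therapist’s limb lengths, so they can drive an avatar with different proportions. In the minimal sketch below, the joint names are hypothetical placeholders for whatever the skeleton tracker reports.

```python
import math
from typing import Dict, List, Sequence

Vec3 = Sequence[float]


def joint_angle(parent: Vec3, joint: Vec3, child: Vec3) -> float:
    """Interior angle in degrees at `joint`, between the bones joint->parent and joint->child."""
    u = [p - j for p, j in zip(parent, joint)]
    v = [c - j for c, j in zip(child, joint)]
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("joint positions coincide; angle is undefined")
    # Clamp to [-1, 1] so floating-point rounding cannot push acos out of its domain.
    cos_theta = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cos_theta))


def elbow_angle_series(frames: List[Dict[str, Vec3]]) -> List[float]:
    """Right-elbow angle per frame; the joint names are hypothetical placeholders."""
    return [
        joint_angle(f["shoulder_right"], f["elbow_right"], f["wrist_right"])
        for f in frames
    ]
```

For example, a shoulder at (0, 0, 0), an elbow at (0, -0.3, 0) and a wrist at (0.25, -0.3, 0) give an elbow angle of 90 degrees, and a fully extended arm gives 180 degrees. Under this assumption, the resulting angle sequence would be applied frame by frame to the corresponding joint of the avatar’s rig.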

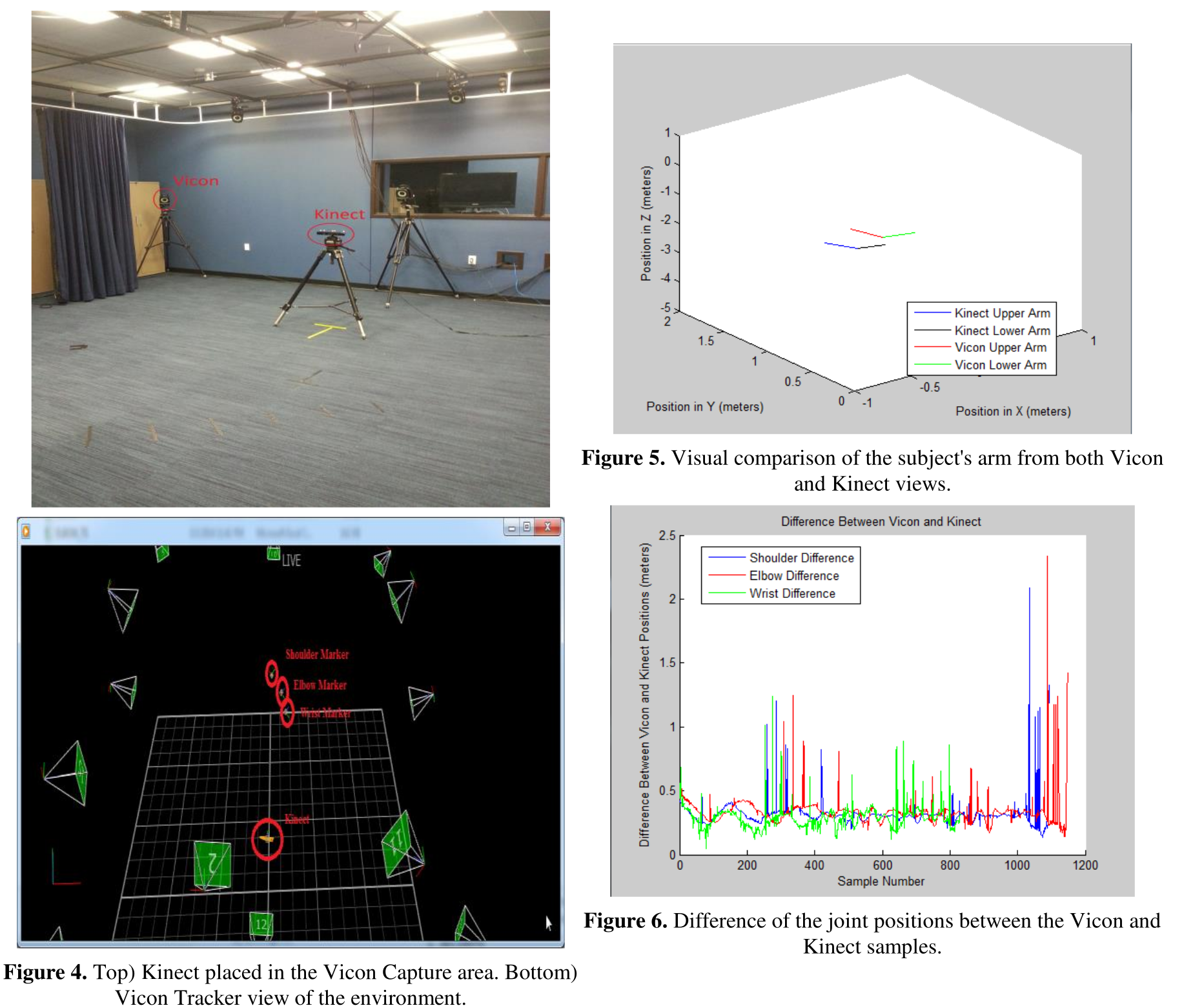

To ensure the clinical viability of this approach, we performed a quantitative evaluation of the Kinect’s skeleton tracker against a gold-standard Vicon motion capture system. This validation work confirmed the accuracy of the Kinect for tracking rehabilitation exercises, establishing its potential as a reliable tool for this application (Gieser et al., 2014).
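The error metric used in the Kinect-versus-Vicon comparison is not stated here. Root-mean-square error (RMSE) of joint positions is a common choice for this kind of validation, and the sketch below shows it under two assumptions: the two streams have already been time-synchronized and resampled to a common rate, and both are expressed in the same coordinate frame.

```python
import math
from typing import Dict, Sequence

Point = Sequence[float]


def trajectory_rmse(kinect: Sequence[Point], vicon: Sequence[Point]) -> float:
    """RMS 3D position error between two time-aligned trajectories of the same joint."""
    if not kinect or len(kinect) != len(vicon):
        raise ValueError("trajectories must be non-empty and of equal length")
    # Squared Euclidean distance between corresponding samples.
    squared = [
        sum((k - v) ** 2 for k, v in zip(pk, pv)) for pk, pv in zip(kinect, vicon)
    ]
    return math.sqrt(sum(squared) / len(squared))


def per_joint_rmse(
    kinect: Dict[str, Sequence[Point]], vicon: Dict[str, Sequence[Point]]
) -> Dict[str, float]:
    """RMSE for every joint present in both recordings (joint names are caller-defined)."""
    return {j: trajectory_rmse(kinect[j], vicon[j]) for j in kinect if j in vicon}
```

Reporting the error per joint, rather than as one pooled number, makes it visible when some joints (for example, distal ones such as the wrists) are tracked less reliably than others.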

Guided Therapy with Haptic Robotics

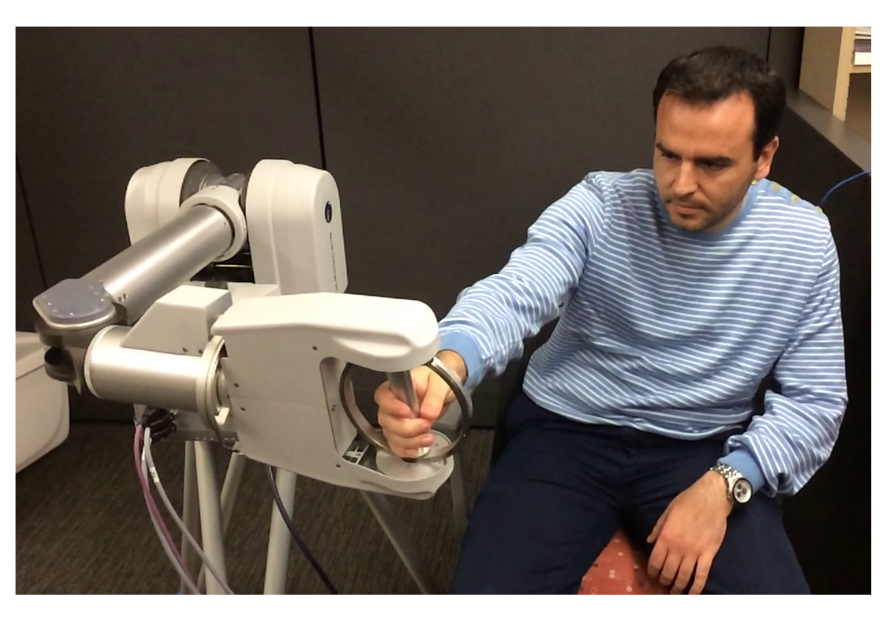

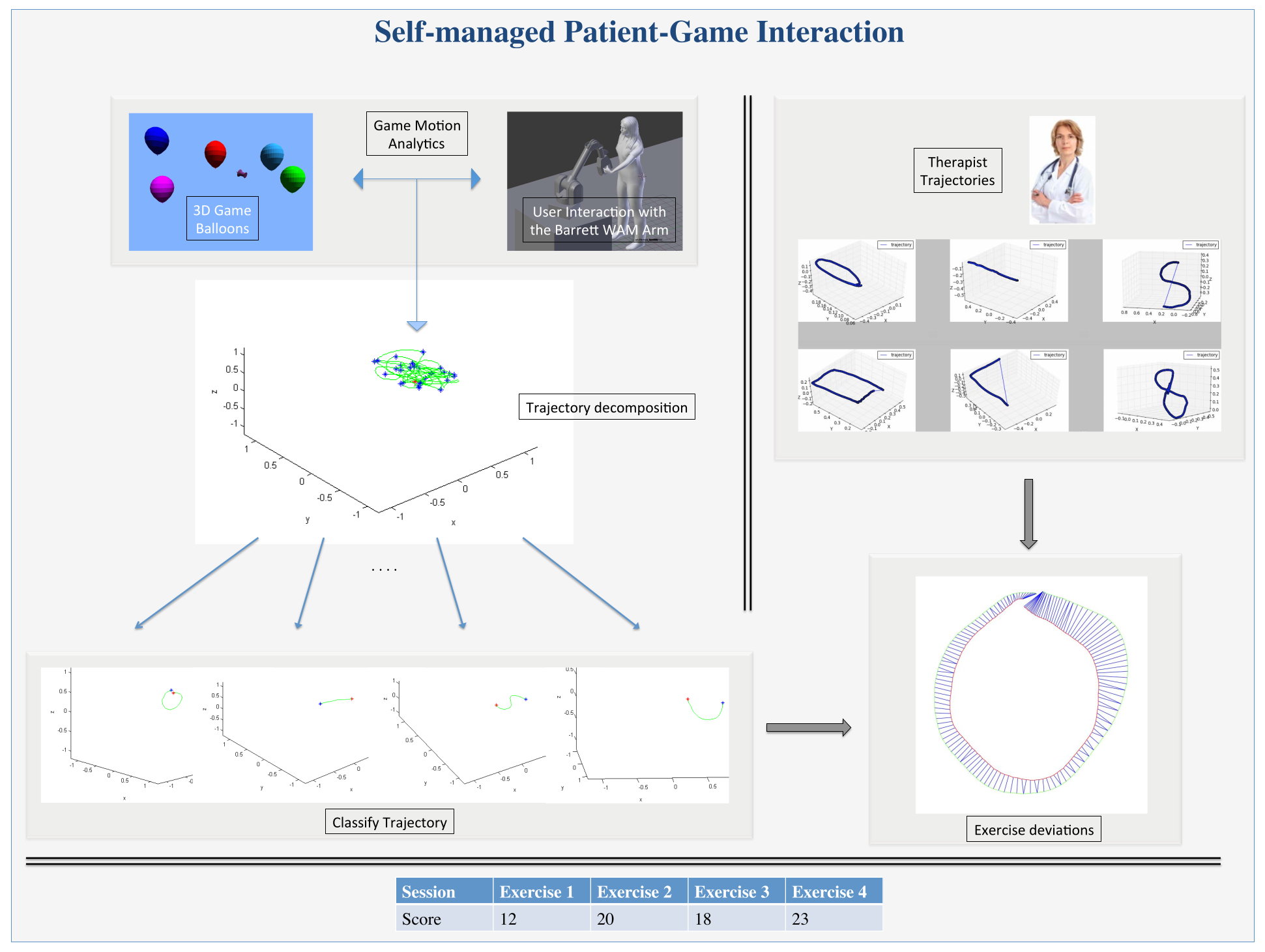

For patients requiring more direct physical guidance, we investigated the use of the advanced Barrett WAM robotic arm. This work presents a novel hybrid approach in which the robot acts both as a passive sensor, recording the patient’s unassisted movements, and as an active guide, gently applying corrective force when the patient’s motion deviates from the prescribed trajectory (Phan et al., 2014).
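The control law behind the hybrid passive/active mode is not described here. The sketch below illustrates the idea with a generic spring-damper (impedance-style) rule, which is an assumption and not the published method. Inside a tolerance band around the reference point the robot applies no force and only records. Outside the band it pulls the arm back toward the trajectory. The gains and tolerance are hypothetical values, and the function is not tied to any Barrett WAM software interface.

```python
import math
from typing import List, Sequence

Vec = Sequence[float]


def guidance_force(
    pos: Vec,
    vel: Vec,
    ref_pos: Vec,
    ref_vel: Vec,
    *,
    stiffness: float = 200.0,  # N/m, hypothetical spring gain
    damping: float = 10.0,     # N*s/m, hypothetical damping gain
    tolerance: float = 0.02,   # m, hypothetical deviation allowed before the robot intervenes
) -> List[float]:
    """Corrective Cartesian force (N) for one control step."""
    err = [r - p for r, p in zip(ref_pos, pos)]
    dist = math.sqrt(sum(e * e for e in err))
    if dist <= tolerance:
        # Passive mode: the patient moves unassisted; the robot only records.
        return [0.0] * len(pos)
    # Active mode: the spring acts only on the deviation beyond the tolerance band,
    # so the force ramps up from zero at the band edge instead of jumping.
    excess = (dist - tolerance) / dist
    # The damping term pulls the arm's velocity toward the reference velocity.
    return [
        stiffness * e * excess + damping * (rv - v)
        for e, rv, v in zip(err, ref_vel, vel)
    ]
```

With these example gains, an arm at rest 5 cm from the reference point receives a 6 N pull toward it, while a deviation of 1 cm produces no force at all.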

To motivate the patient, we developed a “self-managed patient-game interaction” system called MAGNI (Lioulemes et al., 2015). In a custom 3D video game, the patient controls the robotic arm to complete tasks (e.g., popping balloons), which correspond to their prescribed therapy exercises. The system analyzes the motion trajectories to classify the exercises and measure compliance, providing a platform for dynamic, adaptive, and self-managed therapy.
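How MAGNI classifies exercises and scores compliance is not specified here. One simple way to do both from recorded trajectories, assumed for illustration, is nearest-template classification with dynamic time warping (DTW), which tolerates differences in speed between the patient and the template. In this sketch, "compliance" is simply the fraction of repetitions recognized as the prescribed exercise. The template names and that definition are hypothetical.

```python
import math
from typing import Dict, Sequence

Point = Sequence[float]


def dtw_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Classic O(n*m) dynamic time warping distance with Euclidean point cost."""
    n, m = len(a), len(b)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])
            # Extend the cheapest of: insertion, deletion, or match.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def classify(trajectory: Sequence[Point], templates: Dict[str, Sequence[Point]]) -> str:
    """Label of the template with the smallest DTW distance to the trajectory."""
    return min(templates, key=lambda name: dtw_distance(trajectory, templates[name]))


def compliance(
    repetitions: Sequence[Sequence[Point]],
    templates: Dict[str, Sequence[Point]],
    prescribed: str,
) -> float:
    """Fraction of repetitions classified as the prescribed exercise (0.0 if none)."""
    if not repetitions:
        return 0.0
    hits = sum(classify(r, templates) == prescribed for r in repetitions)
    return hits / len(repetitions)
```

For example, with one hypothetical template rising along the y-axis ("shoulder_abduction") and one moving along the x-axis ("elbow_flexion"), a session containing one repetition of each scores a compliance of 0.5 against a "shoulder_abduction" prescription.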

A Multilevel Framework for Human Motion Analysis

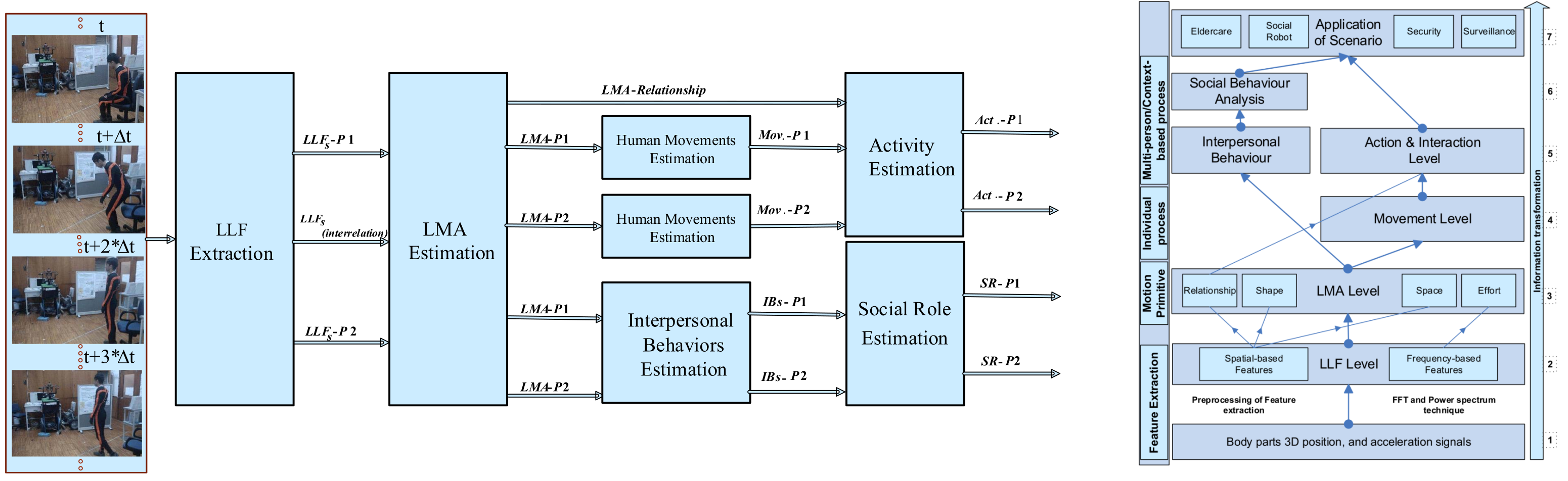

A fundamental challenge in this work is making sense of the complex, high-dimensional motion data captured by these systems. To address this, we proposed a multilevel, body-motion-based methodology for human activity analysis (Khoshhal Roudposhti et al., 2017).

This general framework, inspired by Laban Movement Analysis, models human activity on multiple levels of abstraction. It starts with low-level features from sensor data (e.g., acceleration of body parts) and uses them to estimate mid-level motion descriptors (e.g., Effort, Shape). These descriptors, in turn, are used to classify high-level activities and even social behaviors. This structured, probabilistic approach provides a powerful and descriptive way to analyze and understand human movement, forming the analytical backbone for our rehabilitation systems.
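To make the three levels concrete, the toy sketch below chains them with made-up numbers. It is not the model from Khoshhal Roudposhti et al.: the features, thresholds, descriptor names, activity labels and probability tables are all hypothetical. Low-level features (peak hand acceleration, net vertical hand displacement) are turned into soft estimates of two Laban-style descriptors (Effort "Time": sudden vs. sustained; Shape: rising vs. sinking). A naive-Bayes layer then scores high-level activities, treating the descriptor estimates as soft evidence.

```python
import math
from typing import Dict, Sequence

Soft = Dict[str, float]  # probability of each value of one mid-level descriptor


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


# Level 1 -> Level 2: low-level features to soft mid-level descriptors.
def effort_time(accel: Sequence[float], threshold: float = 8.0, scale: float = 2.0) -> Soft:
    """Hypothetical Effort 'Time' estimate from peak hand acceleration (m/s^2)."""
    p = sigmoid((max(accel) - threshold) / scale)
    return {"sudden": p, "sustained": 1.0 - p}


def shape_vertical(heights: Sequence[float], scale: float = 0.05) -> Soft:
    """Hypothetical Shape 'rising'/'sinking' estimate from net vertical hand displacement (m)."""
    p = sigmoid((heights[-1] - heights[0]) / scale)
    return {"rising": p, "sinking": 1.0 - p}


# Level 2 -> Level 3: made-up priors P(activity) and tables P(descriptor value | activity).
PRIOR = {"reach_up": 0.5, "strike": 0.5}
CPT = {
    "reach_up": {"time": {"sudden": 0.2, "sustained": 0.8},
                 "shape": {"rising": 0.9, "sinking": 0.1}},
    "strike": {"time": {"sudden": 0.9, "sustained": 0.1},
               "shape": {"rising": 0.4, "sinking": 0.6}},
}


def classify_activity(descriptors: Dict[str, Soft]) -> Dict[str, float]:
    """Posterior over activities, with descriptors assumed conditionally independent.

    Each soft descriptor estimate is used as virtual evidence: its likelihood under an
    activity is sum_v P(descriptor = v | activity) * q(v).
    """
    scores = {}
    for activity, prior in PRIOR.items():
        score = prior
        for name, soft in descriptors.items():
            score *= sum(CPT[activity][name][value] * q for value, q in soft.items())
        scores[activity] = score
    total = sum(scores.values())
    return {a: s / total for a, s in scores.items()}
```

With these illustrative tables, a slow upward hand movement (low peak acceleration, rising hand) gets most of its posterior mass on "reach_up", and a fast downward one on "strike". The point of the intermediate layer is that the activity model reasons over a handful of interpretable descriptors rather than raw sensor streams.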