Advanced Methodologies for Time-Series Analysis

A comprehensive research program on deep learning for time-series, focusing on architectural innovation, novel data representations, temporal context, interpretability, and robust evaluation.

Time-series data is at the heart of many critical applications, from Human Activity Recognition (HAR) using wearable sensors to the analysis of complex physiological signals. Modeling this data well raises several challenges: capturing long-range temporal dependencies, fusing multimodal data streams, interpreting “black-box” models, and evaluating models in a scientifically robust way.

This project aims to advance the state of the art in time-series analysis through four interconnected thrusts: innovating on network architectures, developing novel data representations, enhancing models with temporal context and interpretability, and establishing rigorous frameworks for evaluation.

Architectural Innovations for Temporal Data

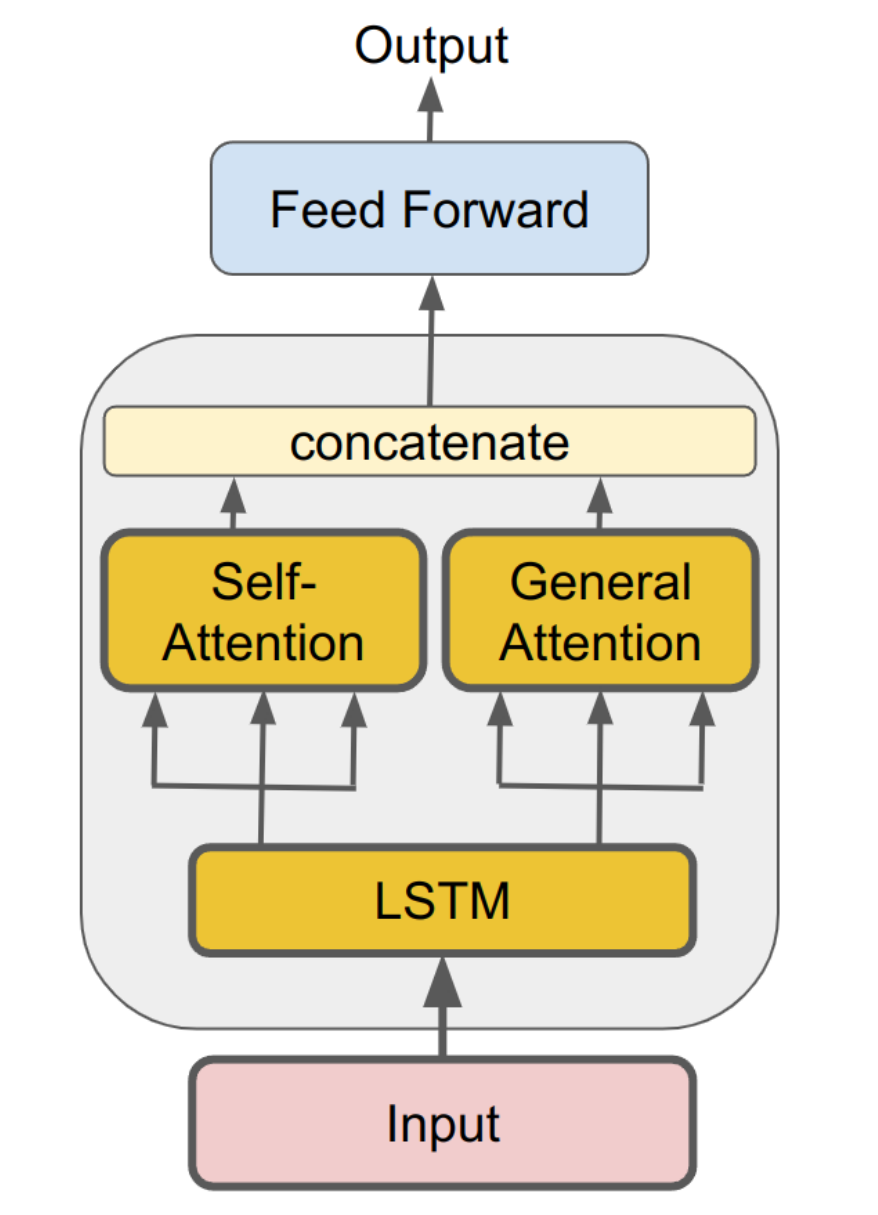

While Transformers have become dominant in many domains, we have shown that for continuous, temporal data, hybrid models combining the strengths of recurrence and attention often yield superior results. Our work (Katrompas et al., 2022) demonstrates that an LSTM combined with self-attention can outperform a pure Transformer architecture for time-series classification.

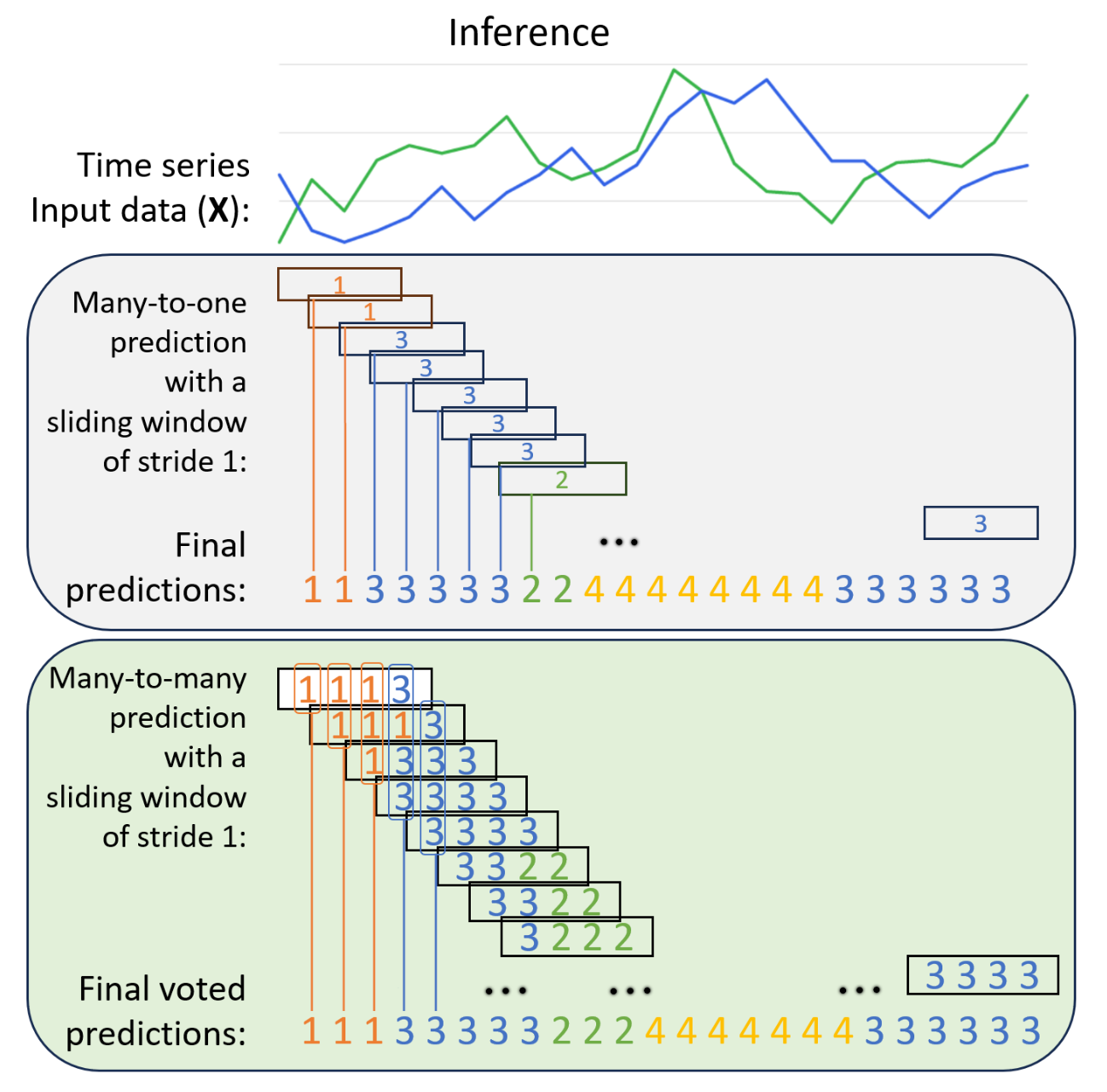

Building on this, we developed a many-to-many prediction model to handle data with frequent label transitions, a common challenge in gesture recognition. Traditional many-to-one models assign a single label to an entire window. Our model instead predicts a label for every time-step and uses a voting scheme to consolidate the output, allowing it to capture rapid changes that would otherwise be missed (Katrompas & Metsis, 2024).
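The consolidation step can be illustrated with a minimal sketch. It assumes overlapping windows with a fixed stride and a plain majority vote per time-step. The function name `consolidate_by_vote` and the tie-breaking rule are illustrative assumptions, not the voting scheme from the cited paper.

```python
from collections import Counter


def consolidate_by_vote(window_preds, stride):
    """Merge per-time-step labels from overlapping windows by majority vote.

    window_preds: list of equal-length label lists, one list per window.
    stride: number of time-steps between the starts of consecutive windows.
    Assumption: plain majority voting; the published scheme may differ.
    """
    if not window_preds:
        return []
    window_len = len(window_preds[0])
    if stride < 1 or stride > window_len:
        raise ValueError("stride must be between 1 and the window length")
    total_len = stride * (len(window_preds) - 1) + window_len
    votes = [Counter() for _ in range(total_len)]
    for w, preds in enumerate(window_preds):
        start = w * stride
        for offset, label in enumerate(preds):
            votes[start + offset][label] += 1
    # Ties go to the label that was seen first at that time-step.
    return [v.most_common(1)[0][0] for v in votes]


windows = [
    ["walk", "walk", "walk", "wave"],
    ["walk", "walk", "walk", "wave"],
    ["walk", "wave", "wave", "wave"],
]
merged = consolidate_by_vote(windows, stride=1)
print(merged)  # ['walk', 'walk', 'walk', 'wave', 'wave', 'wave']
```

At the fourth time-step the three windows disagree (two votes for "wave", one for "walk"), and the vote places the transition there instead of assigning one label to a whole window.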

Novel Data Representations: Fusion and Topology

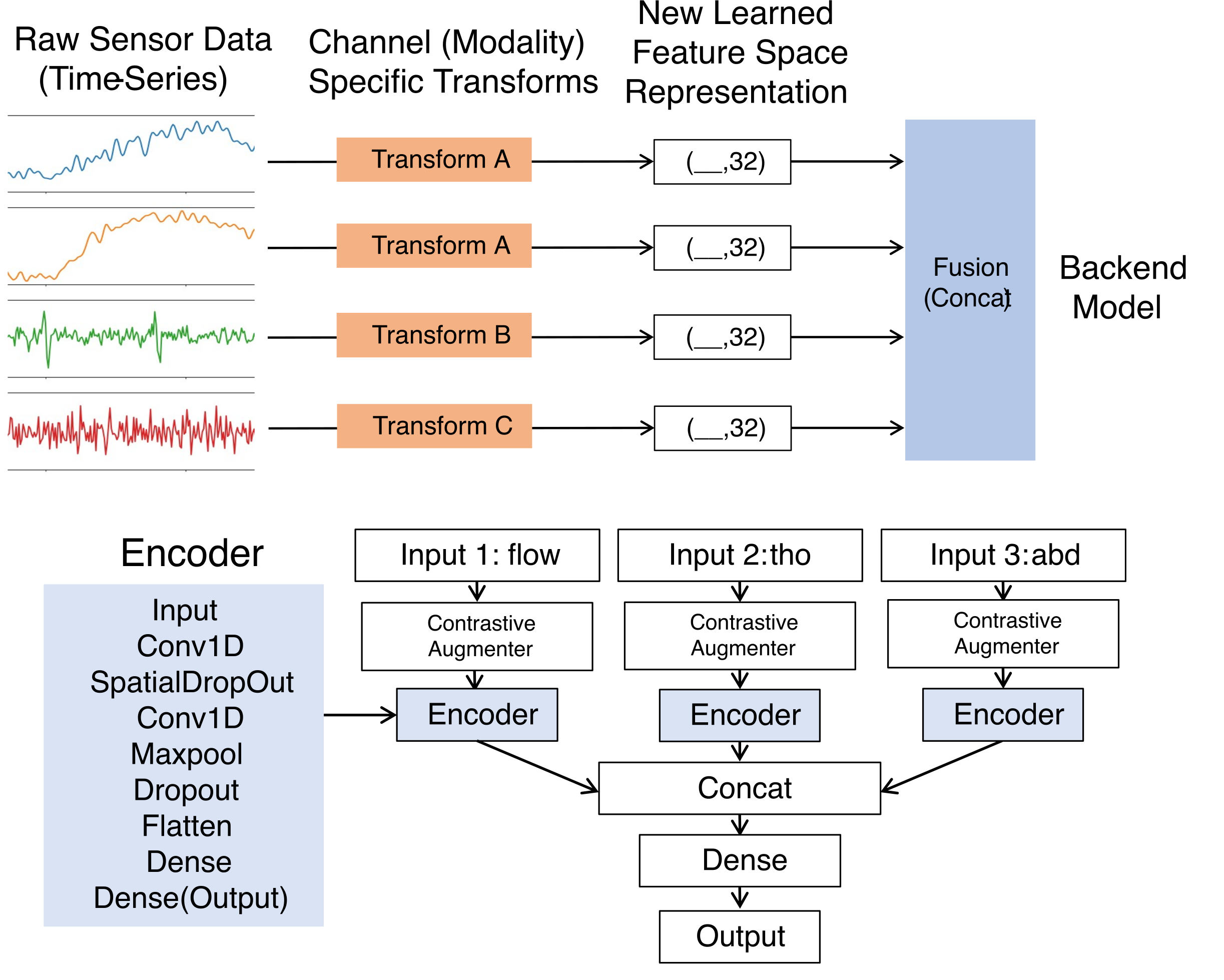

How data is represented before it even reaches a model is crucial. For multimodal data, where sensor streams are physically dissimilar, we developed a late-fusion architecture (Hinkle & Metsis, 2022; Hinkle et al., 2023). Each sensor channel is processed independently by a dedicated feature encoder, which can be pre-trained on unlabeled data using self-supervised learning. The resulting learned representations are then fused for final classification, allowing the model to weigh the importance of each modality automatically.
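The data flow of late fusion can be sketched in a few lines. This is only an illustration under stated assumptions: `encode_channel` is a hypothetical stand-in that computes simple statistics where the real system would use a learned, possibly pre-trained encoder, and fusion is assumed to be plain concatenation.

```python
from statistics import mean, pstdev


def encode_channel(signal):
    """Stand-in for a learned per-channel encoder (hypothetical).

    Returns a small feature vector: mean, standard deviation, and range.
    """
    return [mean(signal), pstdev(signal), max(signal) - min(signal)]


def late_fusion_features(channels, encoders):
    """Encode each channel independently, then concatenate the embeddings."""
    fused = []
    for name in sorted(channels):  # fixed order keeps feature positions stable
        fused.extend(encoders[name](channels[name]))
    return fused


sample = {
    "accel": [0.0, 1.0, 2.0, 1.0],
    "heart_rate": [60.0, 62.0, 64.0, 62.0],
}
encoders = {name: encode_channel for name in sample}
fused = late_fusion_features(sample, encoders)
print(len(fused))  # 6 features: 3 per channel
```

A classifier trained on the fused vector can then learn how much weight each channel's features deserve, since no channel is mixed with another before encoding.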

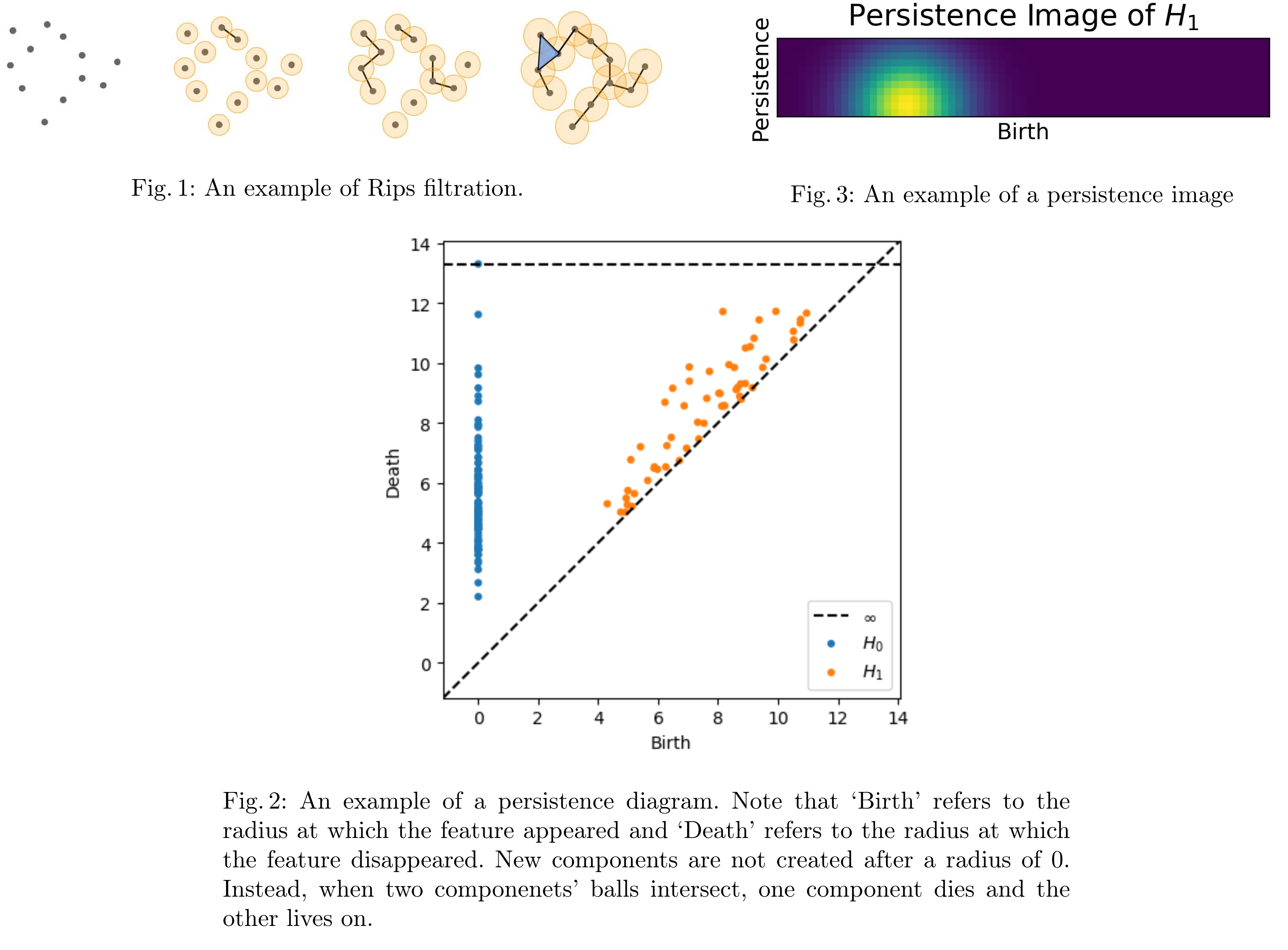

We also explored an entirely new way of representing time-series data using Topological Data Analysis (TDA). Our method (Byers et al., 2022) transforms a raw time-series into a “persistence image”, a stable, vector-based representation of the signal’s underlying topological shape. This TDA embedding acts as a low-level feature extractor and improves performance, especially on imbalanced classes with limited training data.

Temporal Context and Interpretability

Conventional models often analyze time-series windows in isolation. To address this, we introduced a lightweight Bayesian meta-classification approach that models the transition probabilities between labels of adjacent windows (Irani & Metsis, 2024). By considering the predictions of preceding windows, this method refines the current prediction, enhancing accuracy by incorporating temporal context.
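A minimal sketch of this idea follows. It assumes a first-order model in which the previous window's posterior and a smoothed transition table give a prior for the current window, which is then combined with the base classifier's output. Treating that output as a likelihood-like score is a simplifying assumption, and the function names are illustrative; the cited paper's exact formulation may differ.

```python
def estimate_transitions(label_sequences, classes, smoothing=1.0):
    """Estimate P(current label | previous label) with additive smoothing."""
    counts = {a: {b: smoothing for b in classes} for a in classes}
    for seq in label_sequences:
        for prev, curr in zip(seq, seq[1:]):
            counts[prev][curr] += 1
    return {
        a: {b: counts[a][b] / sum(counts[a].values()) for b in classes}
        for a in classes
    }


def refine(prev_posterior, base_probs, transitions):
    """Combine the transition-based prior with the current window's scores."""
    classes = list(base_probs)
    prior = {
        c: sum(prev_posterior[p] * transitions[p][c] for p in classes)
        for c in classes
    }
    unnorm = {c: prior[c] * base_probs[c] for c in classes}
    total = sum(unnorm.values())
    return {c: unnorm[c] / total for c in classes}


classes = ["sit", "walk"]
transitions = estimate_transitions(
    [["sit", "sit", "sit", "walk", "walk", "walk"]], classes
)
prev_posterior = {"sit": 0.9, "walk": 0.1}  # refined belief for the previous window
base_probs = {"sit": 0.45, "walk": 0.55}    # base classifier, current window
refined = refine(prev_posterior, base_probs, transitions)
print(max(refined, key=refined.get))  # sit
```

Here the base classifier narrowly prefers "walk", but because the previous window was almost certainly "sit" and labels tend to persist, the refined prediction is "sit".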

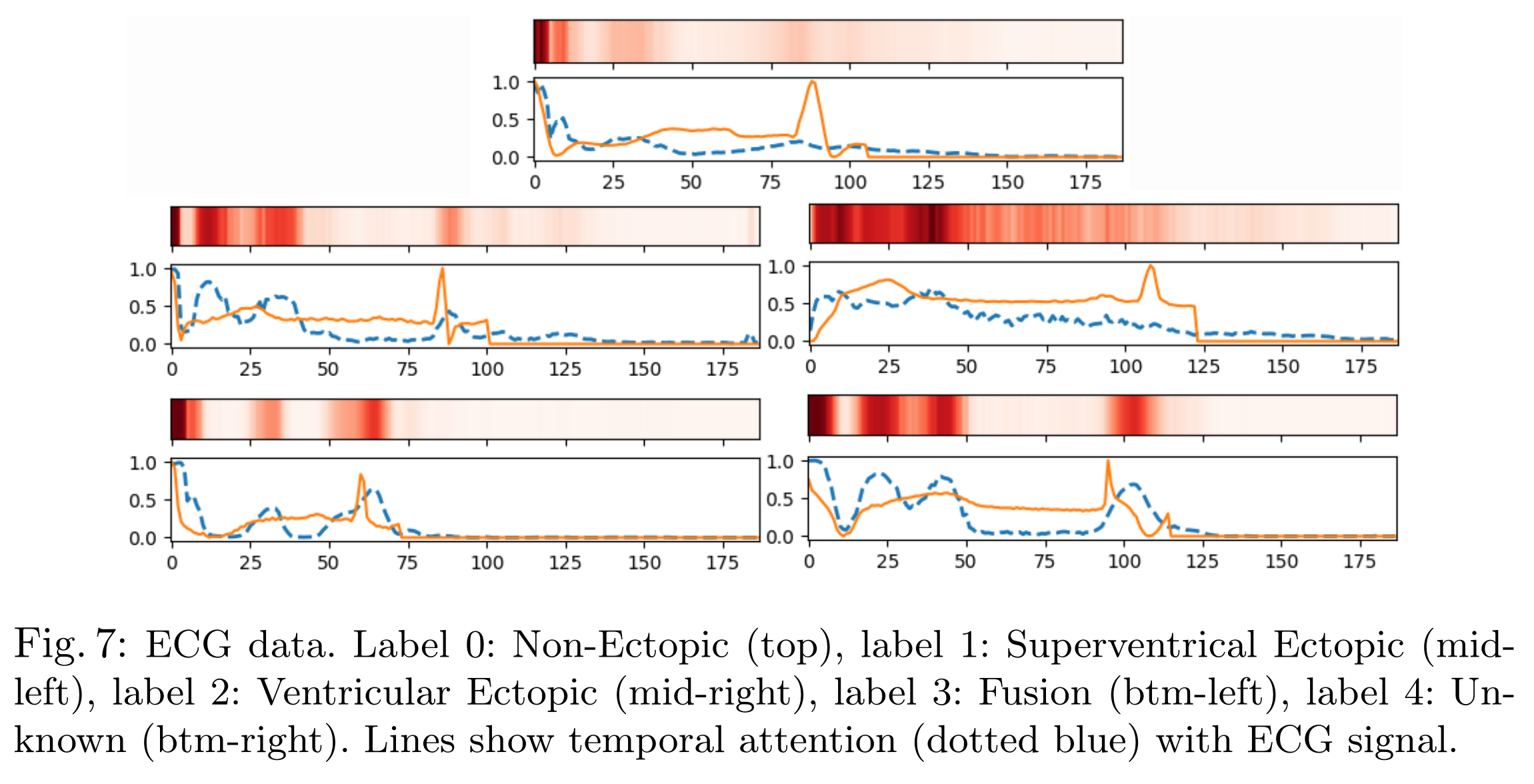

To build trust in our models and make them easier to understand, we developed Temporal Attention Signatures (Katrompas & Metsis, 2023). Akin to attention heatmaps in computer vision, these signatures visualize which time-steps in a sequence the model focuses on most when making a prediction. This technique supports model validation, interpretation, and even hyperparameter tuning, such as selecting the optimal sequence length.
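One plausible way to summarize attention over time is sketched below. It assumes each sample yields a square attention map (rows are query time-steps, columns are attended time-steps, each row summing to 1) and averages the attention each time-step receives. The helper `attention_profile` and this aggregation are assumptions for illustration, not necessarily how the published signatures are computed.

```python
def attention_profile(attention_maps):
    """Average attention received by each time-step across samples and queries.

    attention_maps: list of matrices (lists of rows); row i holds the weights
    that query time-step i assigns to every time-step in the sequence.
    """
    n_steps = len(attention_maps[0][0])
    totals = [0.0] * n_steps
    n_rows = 0
    for amap in attention_maps:
        for row in amap:
            for t, weight in enumerate(row):
                totals[t] += weight
            n_rows += 1
    return [s / n_rows for s in totals]


sample_a = [
    [0.50, 0.25, 0.25],
    [0.25, 0.50, 0.25],
    [0.25, 0.25, 0.50],
]
sample_b = [
    [0.25, 0.25, 0.50],
    [0.25, 0.25, 0.50],
    [0.25, 0.25, 0.50],
]
profile = attention_profile([sample_a, sample_b])
print(profile.index(max(profile)))  # 2: the last time-step draws the most attention
```

If a profile like this stays near zero over the earliest time-steps across many samples, that is one hint that a shorter sequence length may suffice.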

A Framework for Robust and Unified Analysis

Underpinning all this research is the need for rigorous and reproducible evaluation. We showed that standard stratified cross-validation is flawed for subject-based time-series: when windows from the same subject appear in both the training and test folds, information leaks between them and results look overly optimistic. We therefore advocate strict subject-independent evaluation (Hinkle & Metsis, 2021).
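Subject-independent evaluation can be sketched as a leave-one-subject-out split, in which every window from the held-out subject goes to the test set and none to training. The helper below is a minimal standard-library illustration with hypothetical names, not the evaluation code used in the cited work.

```python
def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) per subject.

    subject_ids: one subject identifier per window, in dataset order.
    No subject ever contributes windows to both sides of a split.
    """
    for held_out in sorted(set(subject_ids)):
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train, test


subject_ids = ["s1", "s1", "s2", "s2", "s2", "s3"]
splits = list(leave_one_subject_out(subject_ids))
for held_out, train, test in splits:
    print(held_out, train, test)
# s1 [2, 3, 4, 5] [0, 1]
# s2 [0, 1, 5] [2, 3, 4]
# s3 [0, 1, 2, 3, 4] [5]
```

In practice, scikit-learn's `LeaveOneGroupOut` and `GroupKFold` splitters provide the same guarantee when the subject identifiers are passed as groups.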

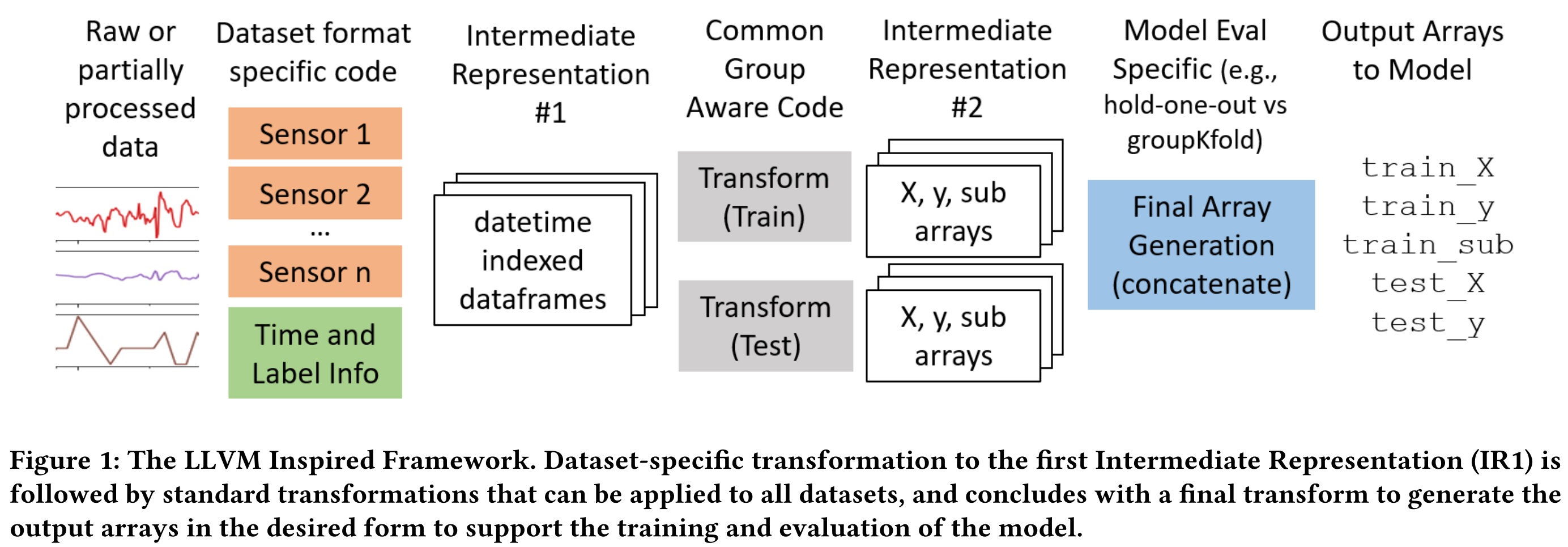

To manage the complexity of working with numerous diverse datasets, we developed an LLVM-inspired software framework (Hinkle & Metsis, 2023). This framework defines standardized intermediate representations for time-series data, allowing for a streamlined and reusable pipeline that converts heterogeneous raw datasets into a unified format ready for robust, subject-aware model training and evaluation.
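To make the idea of a standardized intermediate representation concrete, here is a minimal sketch. Everything in it is hypothetical: the `UnifiedSeries` record, its field names, and the `from_row_major` converter are illustrative assumptions and do not reflect the actual framework's schema or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UnifiedSeries:
    """Hypothetical unified record; field names are illustrative only."""
    subject_id: str        # kept so that splits can stay subject-aware
    sample_rate_hz: float
    channels: dict         # channel name -> list of float samples
    labels: list           # one label per time-step


def from_row_major(subject_id, sample_rate_hz, channel_names, rows, labels):
    """Convert row-major samples (one row per time-step) to the unified form."""
    if len(rows) != len(labels):
        raise ValueError("need exactly one label per time-step")
    columns = {
        name: [row[i] for row in rows] for i, name in enumerate(channel_names)
    }
    return UnifiedSeries(subject_id, sample_rate_hz, columns, list(labels))


record = from_row_major(
    subject_id="s1",
    sample_rate_hz=50.0,
    channel_names=["ax", "ay"],
    rows=[[0.1, 0.2], [0.3, 0.4]],
    labels=["sit", "sit"],
)
print(record.channels["ay"])  # [0.2, 0.4]
```

Each raw dataset would need only one such converter, and every downstream step (windowing, splitting, training) could then be written once against the shared record type.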